How can what engineers learn from how barn owls pinpoint the location of the faintest sounds apply to their development of nanotechnologies capable of doing even better? In episode 61, we’re joined by Saptarshi Das, a nano-engineer from Penn State University, who talks with us about his open-access article “A biomimetic 2D transistor for audiomorphic computing,” co-authored with Sarbashis Das and Akhil Dodda, and published on August 1, 2019 in the open-access journal Nature Communications.


Saptarshi Das: that’s a fascinating aspect: that you can learn from nature which has already, you know, fine-tuned these kind of neurobiological devices for the survival of these animals.

Ryan Watkins: This is Parsing Science: the unpublished stories behind the world’s most compelling science as told by the researchers themselves. I’m Ryan Watkins.

Doug Leigh: And I’m Doug Leigh. Today, in episode 61 of Parsing Science, we’re joined by Saptarshi Das from Penn State University. He’ll discuss his research into engineering a device for determining a sound’s location that’s inspired by the way barn owls precisely determine where sound is coming from to track their prey in the dark … And his device is so small it exists only in two, rather than three, dimensions. Here’s Saptarshi Das.

Das: Hi, this is Saptarshi Das. And I was actually born in India, in a town called Kolkata. Most of my studies in high school and undergraduate studies took place in Kolkata. I graduated with a degree in electronics and telecommunication engineering from Jadavpur University. And after that I directly came to the United States to pursue my doctoral degree. I joined Purdue University, the Electrical and Computer Science Department. And there I was mostly working on micro and nano-electronics, working with new materials, novel devices, and trying to resolve some of the critical issues that we face with energy efficiency of electronic devices. I finished my PhD degree in 2013 from Purdue, and after that I moved to Argonne National Lab for a postdoctoral study. I joined Penn State in 2016 as an assistant professor in the Department of Engineering Science and Mechanics. And it’s already been like three and a half years now. In Penn State we are currently working on novel devices for the next generation of computing, because there are some severe limitations that we are currently facing. And we [are] using new materials – mostly the two-dimensional materials – and devices based on them to resolve those issues.

Watkins: The best solutions to problems aren’t always the most complex, nor are the best answers necessarily new ones. Evolution in the natural world provides millennia of trial-and-error experiments from which we can learn. Saptarshi’s research lab focuses on developing nanodevices which often seek solutions to human challenges by imitating processes found in nature. We started our conversation by asking Saptarshi how he got interested in this design philosophy, called biomimicry.

Interest in biomimicry

Das: We human beings essentially use like five senses, right? We have vision, we have auditory skill. We have sense of smell, touch … But then if you think about the animals, they can actually do way better. For example, spiders can detect, you know, micro vibrations. The jewel beetle can sense radiation; infrared radiation. In fact, bees can sense Earth’s magnetic field. Sharks can detect, you know, electric fields which are as weak as nano-volt. And this is something that they do, you know, seamlessly and they do it because they need to survive. And what has happened in these animals is that they have evolved over millions of years, and they have evolved their sensory organs as well as their neurobiological architecture in such a way that they can do these high-precision tasking, because that is important for their survival. For the humans what has happened is that in humans once our survival has been taken care of we have actually moved on to develop our more intellectual skills. That’s why we have become painters, we have become artists, we write poems, and things like that. But the animals have really tuned their neurobiological system more towards the survival aspect, you know? And that’s why they can do something way better than the human beings. And that’s a fascinating aspect: that you can learn from nature which has already, you know, fine-tuned these kind of neurobiological devices for the survival of these animals. And can we learn from them, and can implement those in solid-state devices, to make our sensors and devices smarter and more high-precision. One of the examples is [what] this paper is all about, right? This barn owl, the barn owl, can actually hunt in complete darkness, you know? And it doesn’t have a good vision, so therefore it uses its auditory skill, you know – auditory information processing skills. But this is something which is [unimaginable] for a human being: to find something in complete darkness.
And there are several other animals which can do things, you know, which the human beings cannot do. So the computation that goes on into the brain of these animals is very, very interesting. And that kind of motivated us to look into, you know, these kind of information processing in the animal brain, and how do they do their computations to get the high precision tasking done?


How auditory information processing works

Leigh: We can all probably remember back to learning about how having two ears allows animals to have stereoscopic hearing capable of localizing the direction a sound is coming from. For example, if you’re wearing headphones right now you should have no problem hearing these rattles being moved from right to left around your head [listen here]. While we might also be familiar with how the hammer, anvil, and stirrup bones amplify sounds in the ear, we may not have learned how the brain processes these neural signals. So, Ryan and I asked Saptarshi to explain how auditory information processing works.

Das: Depending upon the species that you’re talking about, so it’s different for avians or mammals, but it seems like the barn owl uses a very simplistic approach where it mostly uses the timing difference between the sound waves reaching the two ears for the processing of information of which direction the sound is coming from. So let me try to be a little bit more basic of how the information processing takes place in an owl. The reason that we have got the two ears on the two sides of our brain is that by doing so, there will be a difference in time from the sound which is reaching the two ears. Doesn’t matter which direction it’s coming from, there will be a time delay because the distances of the two ears from the sound source are going to be slightly different. So, if you think about a sound coming from the left side, then this sound will probably reach the ear on the left side earlier, and then it will reach the right ear. Now, depending upon the size of the head of the particular species, this time difference could be anywhere from hundreds of microseconds to a millisecond, you know? For example, if you are talking about a barn owl, then the difference would be hundreds of microseconds. But if you’re talking about an elephant, which has a much bigger head size, it could be even a millisecond. But no matter what, the problem in auditory information processing is that this time difference is very, very small. Because [the] neurons in our brain can only fire every few milliseconds. So neuronal operation is actually pretty slow. So now you are asking the neurons – which can fire only every few milliseconds – to process information which occurs every hundred microseconds. Which is 10 times faster. Which is actually very difficult, right? So, the neurons will not be able to recognize something that is happening faster than they’re working on. So, this is exactly where the intelligence aspect of the design of the neuronal brain stem comes into the picture.
What the brain does, you know – or what it has done through millions of years of evolution – is that it has converted this temporal information into a spatial map. So, what it does is it actually [has these] neurons coming from two sides of the ear and meeting at the central brain stem. And the length of the neurons, you know, from both sides of the ear are actually different. And they have multiple differing lengths, so that the information that reaches the right ear first and left ear later coincide at a particular neuron. Because there is a path difference between the two. While the one which is reaching the two ears at the same time, they coincide at a point where the two neuronal lengths are the same. Just by making the length of the neurons different, they can actually capture this time difference. So, they convert this temporal information into a spatial map. Now, this is a very simple way of converting information from time domain to space domain. And then it can use that spatial information to figure out which direction the sound is coming from. And that is pretty much prevalent in the entire animal kingdom. So that’s how the neurons – actually using a special neurobiological architecture – process the auditory information.
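The time-to-place conversion described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the model from the paper: the function names `itd` and `firing_detector`, the 5 cm ear separation, the simple far-field sine formula, and the evenly stepped delay lines are all hypothetical choices made for the example.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in air, roughly room temperature


def itd(ear_separation_m, azimuth_deg):
    """Far-field interaural time difference, t_left - t_right, in seconds.

    Azimuth 0 is straight ahead; positive angles are to the right, so a
    positive result means the right ear hears the sound first.
    """
    return ear_separation_m * math.sin(math.radians(azimuth_deg)) / SPEED_OF_SOUND


def firing_detector(itd_s, n_detectors, step_s):
    """Index of the coincidence detector where both signals arrive together.

    Detector i receives the left-ear signal after i * step_s and the
    right-ear signal after (n_detectors - 1 - i) * step_s, so each
    detector cancels one particular time difference.
    """
    def mismatch(i):
        return abs(itd_s + (2 * i - (n_detectors - 1)) * step_s)

    return min(range(n_detectors), key=mismatch)


if __name__ == "__main__":
    ear_separation = 0.05                 # assumed value, in metres
    largest_itd = itd(ear_separation, 90)
    step = largest_itd / 4                # five detectors span the full range
    print(f"largest time difference: {largest_itd * 1e6:.0f} microseconds")
    for angle in (-90, -30, 0, 30, 90):
        print(angle, firing_detector(itd(ear_separation, angle), 5, step))
```

Which detector responds, rather than when it responds, carries the direction: the middle detector corresponds to a sound from straight ahead, and the end detectors to sounds from the far left or far right.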


Three components of sound localization

Watkins: The animal able to hear the highest frequencies is the greater wax moth. It was long thought that they had evolved this ability to hear the ultrasonic sonar emitted by their biggest predator: bats. Especially since this hypothesis was debunked by a team of scientists led from the University of Florida earlier this month, Doug and I wondered what makes the auditory cortex of the barn owl the standard by which excellence in hearing is gauged.

Das: I don’t think the barn owl can be considered the standard, but it can be a clue towards auditory information detection when it comes to directionality, you know, which direction is [it] coming from? So, there are three components in the sound wave, right. One is which direction it’s coming from. The other is what is its intensity, which will tell you how far this sound source is. And the third component is the frequency, which will tell you what kind of sound source the information is coming from. And it depends upon: what do you want to detect? You want to detect the direction? You want to detect the distance? Or do you want to detect the type of the sound source? Or you may actually want to detect all three. So, for each of these aspects the neural engineering is a little bit different, a little bit tweaked, in order to capture that aspect. Because one particular animal doesn’t need to capture all three aspects, it is really not important for it. And, therefore, different animals depending upon which environment they live in, and what is their prey, and what is their predators? They kind of develop this thing, you know, over the evolution period of millions of years. But by learning from each of these animals, right, from the barn owl, you know, from the bees … You can then start putting things together in an integrated circuit which can do everything, right? That’s your ultimate goal: to make a smart chip which will be as good in hearing like a barn owl. As good in vision maybe like an octopus, because octopus actually really have a very good vision. As good in detecting micro vibration like a spider. Or as good in detecting, you know, radiation like a jewel beetle. So, you learn from each of these individual animals, and then you put all these elements together to make the smartest chip that you possibly can imagine.


Applying biomimetics to nanotechnologies

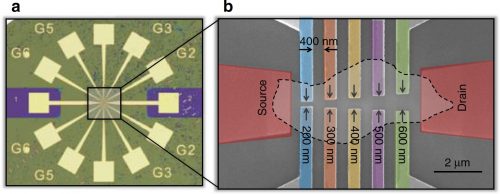

Leigh: Saptarshi and his team created a device comprised of resistors and capacitors – something called an RC circuit – but at a nano-scale just atoms in thickness, qualifying it as being two rather than three-dimensional. The device was designed to mimic mathematical models of animals’ sound localization systems developed in the 1940s by the acoustical scientist Lloyd Jeffress. So, we were interested in learning how and why he was motivated to apply that model to this 2D device.

Das: There are two types of neurons which actually do this operation. One neuron which is called the delay neuron. So, they delay the temporal information so that they can process it. And the other one is called the co-incidence detector neuron, which actually detects the co-incidence of the signal occurring at the same neuron, so as to figure out where this coincidence occurred and therefore which direction the sound is coming from. When we worked with the 2D devices we found that if we make the particular device structure – which we call this split gated geometry – we can actually capture this co-incidence aspect of this neuron. Because the way the transistor operates is just like a switch, you know, so if you apply a particular bias the transistor is on; if you apply a different bias the transistor will be off. Now, typically a general transistor just [has] one gate which controls its state. But instead we thought of coming up with two gates, so that the transistors could be off only when both the gates are actually in the highest state: so that both gates are allowing the transistor to be off. And the same thing happens when the gates are actually in the lower state, the transistor is essentially on. So, we can create this co-incidence aspect just by splitting one gate into two on top of a 2D transistor, and thereby capture or mimic the co-incidence neuron in the barn owl. And then we thought about the delay neurons. And the delay neurons are actually much more like an RC circuit. Because whenever you do circuits … So, if you have a resistance and a capacitive component, that always will act as a delay element. So, we kind of thought about using a 2D transistor and a gate capacitor and combine them and come up with this RC circuit, which kind of mimics the delay neurons.
And then we kind of combine both aspects – delay neuron and the co-incidence neuron – through this RC circuit and the split gated transistor to mimic how the neurobiological architecture in the brain of the barn owl works for sound localization.
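The two building blocks described above, an RC stage acting as a delay and a split gate acting as a coincidence detector, can be sketched as follows. This is a textbook idealization written for illustration, not the device model from the paper: the names `rc_delay` and `split_gate_coincidence`, the 50% switching threshold, the 1 nF capacitance, and the 1 microsecond coincidence window are all assumptions.

```python
import math


def rc_delay(resistance_ohm, capacitance_f, threshold_fraction=0.5):
    """Time for an ideal RC stage driven by a voltage step to reach a
    given fraction of the input, from V(t) = V0 * (1 - exp(-t / RC))."""
    return -resistance_ohm * capacitance_f * math.log(1.0 - threshold_fraction)


def split_gate_coincidence(arrival_a_s, arrival_b_s, window_s):
    """True when the signals on the two gate halves arrive within
    window_s of each other, the condition the split gate responds to."""
    return abs(arrival_a_s - arrival_b_s) <= window_s


if __name__ == "__main__":
    time_difference = 100e-6   # sound reaches one ear 100 microseconds early
    capacitance = 1e-9         # assumed gate capacitance, in farads
    # Resistance that makes the RC stage delay the early signal by exactly
    # the time difference (at the assumed 50% threshold).
    resistance = time_difference / (capacitance * math.log(2))
    early = 0.0 + rc_delay(resistance, capacitance)
    late = time_difference
    print(split_gate_coincidence(early, late, window_s=1e-6))  # delayed path
    print(split_gate_coincidence(0.0, late, window_s=1e-6))    # no delay
```

With the delay in place, the early and late signals line up at the split gate; without it, they miss each other by the full time difference.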


Simultaneously functioning as a delay and a co-incidence neuron

Watkins: Saptarshi’s biomimetic device is unique in that it combines digital and analog computation, which is abundant in biological neural networks. Its resistors provide the circuit’s digital functionality by working in pairs to determine if one of the five computational neurons is switched on or off. Its analog functionality is accomplished by both the spacing between the circuit’s contact points, along with a gate which tunes the device by altering the conductivity between those points, mimicking the way in which animals can be more attentive when necessary to localize a sound’s direction, and less so when not. We asked Saptarshi how the device functions both like a delay and a co-incidence neuron.

Das: This Jeffress model of sound localization has got these two types of neurons, delay neurons and co-incidence neuron, right? The delay neurons are essentially captured by our RC components in the device, so a resistance and the capacitance. The resistance comes from the resistance of the 2D channel material, while the capacitance comes from the capacitance of a dielectric material which is used as the gate. And then we have this co-incidence neuron which is essentially determining whether there is a signal or not, and that aspect is captured by the split gated transistor. So, the two RC neurons, you know, they kind of converge on to one co-incidence neuron, and if there is a signal on these two delay neurons at the same time, then the co-incidence neuron typically fires. In a similar way, you know, you have this RC components connected to two split gates, and whenever the two split gates receive the signal at the same time, you know, that part of the transistor becomes off. So that’s how we kind of capture the co-incidence neuron by a single split gate. But if you think about the Jeffress model there are actually multiple co-incidence neurons to determine which direction the sound is coming from. Because you have to kind of convert the temporal information into a spatial map; therefore, by noting which co-incidence neuron fires you can tell whether the sound came from the right or from the left or from the front. That aspect is covered in our circuit by making – instead of making one split gate, multiple split gates – but each split gate is actually having a different separation between the two. So that they can kind of capture the analog aspect of the current transport in this 2D channel.


Working with 2D nanomaterials

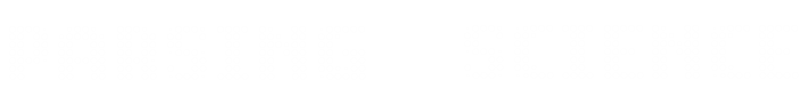

Leigh: The activation of neurons related to delay and co-incidence are essential for how the barn owl locates the direction from which the sound of its prey came. Mimicking this in a 2D nano transistor required building multiple split gates with gaps of varying widths to determine which of its digital neurons fired when a sound triggered the electrical charge. Here Saptarshi explains how he and his team were able to manipulate nano-materials to create such circuits.

Das: One of the interesting aspects about these two-dimensional materials is that … let’s say I have one-centimeter by one-centimeter chip, you know, and I put this 2D material which is one layer or two layers in thickness on top of that. Then you can just go and look under the optical microscope and then these different layers actually show up in different contrast. So, their colors will be very different, you know, a very thin flake may look very brown, but if the thickness is a little bit bigger then you will probably get much more like a bluish appearance. If it’s very thick it will be more yellowish. So just by an optical inspection you can get a first approximation of what are their thickness: whether it’s a mono-layer, whether it’s like in the range of like five to ten layers, or if it’s like a hundred layers. So now once you have that initial estimation, then you can take that sample and look under the atomic force microscopy – which is much more precise measurement of the actual thickness – and kind of verify whether the thickness that you predicted by optical inspection is correct or not. And you also get the information about their precise thickness. Now, once you know those thicknesses, then you can select which of the flakes you want to work with, and then you go ahead and do some lithography … which we call electron beam lithography, where we work with an electron microscope. And we go ahead and put the contacts, and then we evaporate metal, again, using electron beam evaporation technique. And we kind of start fabricating these devices. At Penn State we have this clean room facility which has got all these nano-fabrication tools which involves, you know, any optical and electron beam lithography. It has evaporators, either thermal sputtering or electron beam evaporation. It has all the etching tools.
So, we have a centralized facility at Penn State which helps us to then, you know, make the devices with [these] low dimensional materials, these 2D materials.


How 2D nanomaterials might reinstate Moore’s Law of Scaling

Watkins: Probably the best-known version of Moore’s Law – named after Gordon Moore, the co-founder and former CEO of Intel – is that the number of transistors in a computer chip doubles on a scale of every two years or so. 2D devices stand to drastically shrink the size of computers’ integrated circuits. But there’s more to a computer chip’s evolution than the scaling of its size. So, Doug and I were curious how this relates to Saptarshi’s interest in developing 2D nano-transistors.

Das: If we think about the revolution that has taken place in the silicon industry … so, we started with devices which were really very, very large. Large in the sense of like tens or hundreds of micrometer silicon transistors were made back in 1960s and 70s. And then what has happened over the last 50 years is this huge revolution in this silicon semiconductor industry, where we have made the devices smaller and smaller. And the reason for that is if you have more devices on the same chip, then you can do more operations, or more functionalities can be added. And this is something which we all know about: the Moore’s Law of scaling. Essentially you just keep scaling the transistor dimension, you get more and more transistors on the same chip, so therefore you can do more and more computation. So there are essentially three quintessential aspects of scaling, you know: size scaling, which makes the device smaller; energy scaling, which makes sure that the power budget is constrained; and complexity scaling to make sure that we can do, you know, hard computational problems on chips. Now this continued almost for 50 years, you know, and this is what we call the Golden Era of Scaling or the Dennard Scaling Era. Now in 2005 the first wall was hit, actually, and when we could not anymore scale the energy consumption by a device, the energy scaling essentially stopped – and there’s a fundamental limit on which [these] transistors actually operate – which stopped the energy scaling. But the size scaling still continued for another ten years or so – with a lot of problems as well – but then in 2017 or 18, the size scaling also stopped. So, currently if you think about the technology node, it’s like ten nanometer technology. And beyond that, nobody knows what’s going to happen. So, the size scaling aspect of the computational revolution has also stopped. And, finally, since these two aspects have stopped, the complexity scaling has also kind of ended.
So, all the three scaling aspects which were giving us more and more computational power have essentially stopped. We have to come up with some strategies to revive all three aspects, and this is exactly where the 2D materials come in.

Leigh: We’ll hear how after this short break.



Strategies for reinstating size, energy and complexity scaling

Leigh: Here again is Saptarshi Das.

Das: Silicon is a bulk material, so if you really want to make something very small, you also have to make it very thin. And if you make silicon very thin, then there are lots of quantum mechanical aspects which actually degrade the property of silicon, because it’s a bulk material. On the other hand, the 2D materials – by their nature, by their inherent nature – are a single atomic layer, or few atomic layers. So, they are really not constrained by the quantum mechanics, and therefore they can be scaled much more aggressively than the silicon technology. So that is one of the primary aspects: that moving from silicon to 2D material may actually help us to reinstate the size scaling. Now, when it comes to the energy scaling – this is exactly where we’re actually getting the motivation from the brain. So, think about the amount of information that any animal brain actually processes, you know? And we humans typically have got billions of neurons, and each neuron is connected to, you know, at least 10,000 other neurons – which are called the synapses – where exactly the information processing takes place. If I think about trillions of, you know, connections and computations going on in brain every passing second, it still consumes a very, very small amount of energy. Like 20 watts of energy. If the same amount of computation has to be done by a supercomputer today, you know, it will take almost like 1 megawatt of energy. That means that our supercomputers – even if we continue the size scaling and make more and more computational power – they will still be consuming 6 to 7 orders of magnitude higher energy than the human brain. So, there is something to be learned from the information processing in the brain that can make these devices energy efficient, and thereby we could actually reinstate the energy scaling aspect. And at the same time, brain is, you know, humongously complex. So, the complexity scaling could also be reinstated by learning from brain.
And what we found is that, you know, these two-dimensional materials have some unique electronic and optoelectronic properties which we can harness in order to kind of mimic the neurobiological aspects of these different animal brains. So that’s how the 2D material, you know, and the biomimicry comes to the rescue of scaling the transistor dimension down, making them energy efficient, and at the same time do much more complex operation seamlessly.


Why delay neurons are necessary anyhow

Watkins: It seemed to Doug and me that “faster” would be “better” when it comes to processing the directionality of sound. So, we were interested in learning why it’s necessary to have neurons that delay the arrival of sound in the first place.

Das: If you think about the fact that the way the information propagates through typical circuits: you know? I mean, we are talking about the wires, where we are talking about electrons traveling at a very high speed, almost close to the speed of light. In a normal circuit, that’s what you really want: [that] information should be processed extremely fast, within nanoseconds to even picoseconds. But if you do that, the length of the wire that we will need in order to kind of capture this time difference will be of the order of kilometers. Okay? And, therefore, you need to slow down the velocity of this wave propagation through these wires in order to capture different time delays. And this is what we do with the RC component. Now, for the barn owl, it has already got some neurons, and it has a particular head size, and it needs to only listen to sound of its prey, right? When it’s moving, or whenever it’s doing something. And, therefore, for barn owls, a delay of the order of, you know, hundreds of microseconds or a millisecond is good enough. And that is something we can really capture in the RC circuit. So, we can now tune the R and C values to change our delays from anywhere from a nanosecond to a millisecond. Therefore, for us: we can actually capture any frequency in the device. But for the barn owl, it is only the auditory frequencies, because that helps it in its survival. And when this survival aspect is taken care of, it doesn’t really care about fine-tuning it. But since we are using these 2D materials, we are using nanotechnology: we have all the tools to manipulate the device dimensions, the resistance, the capacitance, and, therefore, we can actually work with the entire electromagnetic spectrum. So that’s where we learn from nature, and then try to become better than nature.
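A quick back-of-the-envelope calculation shows why a plain wire cannot serve as the delay element, and how wide a range of delays an RC stage can cover. The sketch below is illustrative only: the function names, the 100 microsecond target delay, the use of the time constant RC as the delay, and the 1 pF capacitance are assumptions made for the example, not values from the paper.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s, upper bound on signal speed in a wire


def wire_length_for_delay(delay_s, signal_speed_m_per_s=SPEED_OF_LIGHT):
    """Length of wire a signal must travel to be delayed by delay_s."""
    return delay_s * signal_speed_m_per_s


def resistance_for_time_constant(tau_s, capacitance_f):
    """Resistance that gives an RC time constant of tau_s (tau = R * C)."""
    return tau_s / capacitance_f


if __name__ == "__main__":
    owl_scale_delay = 100e-6  # seconds, the scale quoted for barn owls
    length_km = wire_length_for_delay(owl_scale_delay) / 1000
    print(f"wire needed for a 100 microsecond delay: about {length_km:.0f} km")

    capacitance = 1e-12  # assumed fixed capacitance, in farads
    for tau in (1e-9, 1e-6, 1e-3):
        r = resistance_for_time_constant(tau, capacitance)
        print(f"tau = {tau:.0e} s needs R = {r:.0e} ohm")
```

Under these assumptions, spanning delays from a nanosecond to a millisecond is a matter of changing the resistance (or the capacitance) by about six orders of magnitude, whereas the equivalent wire would run to tens of kilometers.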


Besting the barn owl

Leigh: While the barn owl excels in its ability to determine the location of sound in complete darkness, Ryan and I wondered if it might be possible for Saptarshi’s device to exceed the fidelity of barn owls’ hearing. Here’s what he had to say about the question.

Das: What we have demonstrated … you know, it depends upon the number of split gates that we have. We used five, because it was a proof-of-concept demonstration. And, in fact, with five, we are actually not better than barn owls. But how we can become better than barn owl is by adding more split gates. Now, that is not any limitation, because you just have to – when you fabricate the device – you just put instead of five, you put just 50, you know? And we can easily do that. The barn owl only needs to detect things within two- to three-degree precision, but by adding more and more split gates, we can be even more precise than the barn owl. You know, you can actually get to a level where the precision is way better than the barn owl. And then you can use it for any kind of a navigational sensor. So, we learned it, and that is [why] we say, you know, “now we can design our systems to be performing way better than the barn owl.”


Potential applications of Saptarshi’s device

Watkins: Saptarshi’s tiny device seemed to us to have a wide range of possible uses, such as enhancing existing assistive devices – like hearing aids – or augmenting listening devices used to detect and locate victims trapped in debris following natural disasters. So we wrapped up by asking Saptarshi what he sees as the device’s potential applications.

Das: One of the major applications will be any kind of a navigational sensor, right? You could use it for commercial or military purposes. For example, if you want to deploy it in kind of remote locations, and if you want to figure out which direction something is moving, you know? It could be human movement, it could be movement of, you know, military instruments. If you could precisely figure it out from a long distance – the exact direction these movements are taking place – that will definitely give you a lot of aid when it comes to, you know, defense applications. The same thing applies for navigation in ships or in airplanes: you could actually figure out signals coming from different directions in a much more precise way. It could also be used for underwater, you know, sonar application where you are trying to find something. So, anything that has to do with navigation, this kind of a technology will be very useful.


Links to article, bonus audio and other materials

Leigh: That was Saptarshi Das, discussing his open access article “A biomimetic 2D transistor for audiomorphic computing,” which he published with Sarbashis Das and Akhil Dodda in the journal Nature Communications on August 1st, 2019. You’ll find a link to their paper at parsingscience.org/e61, along with bonus audio and other materials we discussed during the episode.

Watkins: You probably already know about Parsing Science’s website, weekly newsletter, and our toll-free message line. But did you know that we also tweet news about the latest developments in science … including many brought to our attention by listeners like you? Follow us @parsingscience, and next time you spot a science story that fascinates you, let us know, and we might just feature the study’s researchers in a future episode of the show.


Preview of next episode

Leigh: Next time, in episode 62 of Parsing Science, we’ll be joined by Dimitris Xygalatas, from the University of Connecticut’s Department of Anthropology. He’ll talk with us about his research into an extreme ritual involving pain and suffering, but that has no discernible long-term harmful effects on its practitioners. It may actually positively impact their psychological well-being.

Dimitris Xygalatas: A lot of these very extreme rituals that involve a lot of pain and suffering … And a lot of behaviors that might be seen as posing direct risks to health – things like the possibility of infection, or physical trauma, or psychological trauma, and so forth – a lot of them are actually culturally-prescribed remedies for a number of illnesses. And especially those illnesses that don’t have clear physical manifestations, so things like mental illness.

Leigh: We hope that you’ll join us again.

[ Back to topics ]

Next time, in episode 62 of Parsing Science, we’ll be joined by Dimitris Xygalatas from the University of Connecticut’s Department of Anthropology. He’ll talk with us about his research into an extreme ritual involving pain and suffering … but that has no discernible long-term harmful effects on its practitioners, and may actually positively impact their psychological well-being.@rwatkins says:

Saptarhi’s tiny device seemed to us to have a wide range of possible uses, such as enhancing existing assistive devices like hearing aids or augmenting listening devices used to detect and locate victims trapped in debris following natural disasters. So we wrapped up by asking Saptarshi what he sees as the device’s potential applications.@rwatkins says:

While the barn owl excels in its ability to determine the location of sound in complete darkness, Ryan and I wondered if it might be possible for Saptarshi’s device to exceed the fidelity of barn owls’ hearing. Here’s what he had to say about the question.

It seemed to Doug and me that faster would be better when it comes to processing the directionality of sound, so we were interested in learning why it’s necessary to have neurons which delay the arrival of sound in the first place.

Probably the best-known version of Moore’s Law, named after Gordon Moore, a co-founder and former CEO of Intel, is that the number of transistors on a computer chip doubles about every two years. 2D devices stand to drastically shrink the size of computers’ integrated circuits. But there’s more to computer chips’ evolution than scaling of their size. So Doug and I were curious how this relates to Saptarshi’s interest in developing 2D nano-transistors.

The activation of neurons related to delay and co-incidence is essential for how the barn owl locates the direction from which the sound of its prey came. Mimicking this in a 2D nano-transistor required building multiple split-gates with gaps of varying widths to determine which of its digital neurons fired when a sound triggered the electrical charge. Here, Saptarshi explains how he and his team were able to manipulate nanomaterials to create such circuits.

Saptarshi’s biomimetic device is unique in that it combines digital and analog computation, a combination that is abundant in biological neural networks. Its resistors provide the circuit’s digital functionality by working in pairs to determine if one of five computational neurons is switched on or off. Its analog functionality is accomplished by both the spacing between the circuit’s contact points and a gate which tunes the device by altering the conductivity between those points … mimicking the way in which animals can be more attentive when necessary to localize a sound’s direction, and less so when not. We asked Saptarshi how the device functions both like a delay and co-incidence neuron.

Saptarshi and his team created a device composed of just resistors and capacitors, called an RC circuit ... but at a nano-scale just atoms in thickness, qualifying it as two-dimensional rather than three-dimensional. The device was designed to mimic mathematical models of animals’ sound localization systems developed in the 1940s by the acoustical scientist Lloyd Jeffress. So we were interested in learning how, and why, he was motivated to apply that model to this 2D device.

The animal able to hear the highest frequencies is the greater wax moth. It was long thought that they evolved this ability to hear the ultrasonic sonar emitted by their biggest predator: bats. Especially since this hypothesis was debunked by a team of scientists led from the University of Florida earlier this month, Doug and I wondered what makes the auditory cortex of the barn owl the standard by which excellence in hearing is gauged.

We all can probably remember back to learning about how having two ears allows animals to have stereophonic hearing capable of localizing the direction a sound is coming from. For example, if you’re wearing headphones right now, you should have no problem hearing these rattles being moved from right to left around your head ... While we might also be familiar with how the hammer, anvil and stirrup bones amplify sound in the middle ear, we may not have learned how the brain processes these neural signals, so Ryan and I asked Saptarshi to explain how auditory information processing works.

The best solutions to problems aren’t always the most complex, nor are the best answers necessarily new ones. And the natural world provides millennia of evolutionary trial-and-error experiments from which we can learn. Saptarshi’s research lab focuses on developing nanodevices that often address human challenges by imitating processes found in nature. We started our conversation by asking Saptarshi how he got interested in this design philosophy, called “biomimicry.”