Might early hearing impairment lead to cognitive challenges later in life? Yune Lee from the Ohio State University talks with us in episode 30 about his research into how even minor hearing loss can increase the cognitive load required to comprehend spoken language. His open-access article “Differences in hearing acuity among ‘normal-hearing’ young adults modulate the neural basis for speech comprehension” was published with multiple co-authors in the May-June 2018 issue of eNeuro.

Websites and other resources

- Yune’s SLAM Lab

- Hearing Review article linking hearing loss to dementia

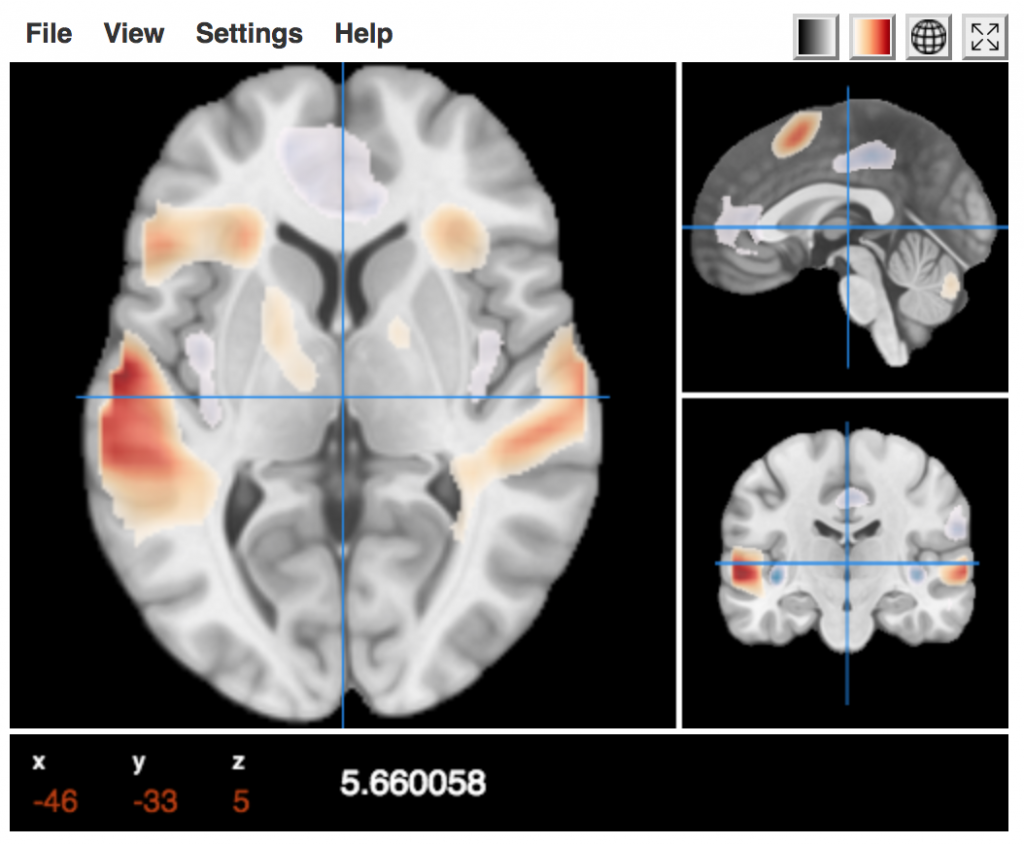

- Interactive fMRI images (T Maps)

- Sample T Map (as discussed in the episode) …

Press

EurekAlert! | Healthline | Medical News Today | MedIndia

Bonus Clips

Patrons of Parsing Science gain exclusive access to bonus clips from all our episodes and can also download mp3s of every individual episode.

Support us for as little as $1 per month at Patreon. Cancel anytime.

Please note that we’re not a tax-exempt organization, so unfortunately this gift isn’t tax deductible.

Hosts / Producers

Ryan Watkins & Doug Leigh

How to Cite

Watkins, R., Leigh, D., & Lee, Y. S. (2018, August 21). Parsing Science – Hearing Loss and Cognition. figshare. https://doi.org/10.6084/m9.figshare.6994613

Music

What’s The Angle? by Shane Ivers

Transcript

Yune Lee: We were convinced that the right frontal area comes into play, even with a very slight hearing decline.

Doug Leigh: This is Parsing Science: the unpublished stories behind the world’s most compelling science, as told by the researchers themselves. I’m Doug Leigh.

Ryan Watkins: And I’m Ryan Watkins. While death and taxes may be the only certainties in life, it’s often the case that the older we get, the poorer our hearing becomes. Today, we’re joined by Yune Lee from the Ohio State University. He’ll talk with us about his research which suggests that hearing loss among younger people can tax their cognitive resources in ways that are typically not encountered until our 50s. Here’s Yune Lee.

Lee: Hi, my name is Yune Lee. I’m an assistant professor in Chronic Brain Injury and in the Department of Speech and Hearing Science at the Ohio State University. I’ve been here for almost two years, and my main domain of expertise is auditory neuroscience, with a focus on speech, language, and music. So, we study how speech and music are connected in the brain by using fMRI or a portable neuroimaging device called functional near-infrared spectroscopy, or fNIRS.

Leigh: Yune is also a musician and was previously a commercial music director. However, after stumbling onto research regarding music processing in the brain, he decided to return to school, completing his PhD in cognitive neuroscience at Dartmouth College. Ryan and I wondered what led to his interest in studying differences between younger and older adults’ hearing ability.

Lee: I was really fascinated by the way the brain adjusts itself to make sense of speech sounds, especially older people’s brains. When people get older, their brains go through some cognitive decline, and also hearing decline, as you may know, and the brain is actively adjusting itself. The right side of the brain is typically idling during language processing. As you know, language processing is predominantly left-lateralized, but the right side of the brain, the homologous areas, comes into play to compensate for the cognitive and hearing decline that we typically see in people after age 50. But in my recent study, we were surprised to see that the right side of the brain actually lights up when people have a slight, tiny bit of hearing decline, so slight that they’re not even aware of it. So, that was the paper that we wrote.

Watkins: Doug and I were also curious to learn what it is that’s known about the degree to which hearing loss is linked to dementia.

Lee: There’s a study done by Frank Lin in 2011, and according to that study, compared with normal hearing, the hazard ratio for dementia was 1.89 for mild hearing loss, 3.0 for moderate hearing loss, and 4.9 for severe hearing loss. So, people with mild hearing loss are about twice as likely to develop dementia, people with moderate hearing loss about three times as likely, and people with severe to profound hearing loss about five times as likely. That’s what the study suggested, which was very shocking, but it doesn’t necessarily mean that there’s a direct link between hearing loss and dementia. My grandmother actually passed away last year at age 88, and I was very surprised by her cognitive health. I mean, she memorized the phone numbers of my mom and my aunts, and these days I don’t remember phone numbers, right? I barely remember my own phone number and a few others. But she had a lot of issues with hearing; I had to speak loudly. So, you can find a lot of exceptions out there. Given that we see activity in areas that we normally don’t see in youngsters, they are already starting to recruit this compensatory network, but that doesn’t necessarily indicate that they are definitely going to develop dementia down the road. Some people ask me, you know, “I have a son who has a hearing loss, and I’m worried that down the road he will develop some type of dementia.” So, I have to convince them: no, no, no. Although we speculate that hearing loss is a risk factor, there’s no such study yet, so this definitely needs prospective follow-up studies. I want to be really cautious about overinterpreting this.

Leigh: Yune and a team of five other researchers from across the US tested the hearing acuity of young adults 18 to 41 years of age, a group he refers to as “youngsters.” Since so few people in this group experience hearing loss, Ryan and I asked him just how hearing and its loss are measured.

Lee: Auditory sensitivity can be measured in a few different ways. One of the most popular is what’s called PTA, pure-tone audiometry. You basically gradually decrease the volume of a pure tone at a certain frequency until the person doesn’t hear anything, and it’s done for specific frequency ranges. And, you know, there’s a systematic way of figuring out whether someone clinically falls into the category of the normal range, mild hearing loss, or severe to profound hearing loss. There are certain thresholds: typically, below 25 decibels is regarded as the clinically normal range for youngsters, and beyond 70 decibels is severe hearing loss, close to complete hearing loss or deafness, if you will.
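To make the threshold logic concrete, here is a minimal Python sketch, assuming a simulated listener. The descending search mirrors the description above (lower the level of a pure tone until it is no longer heard); the 60 dB starting level, the 5 dB step, and the set of frequencies averaged into the pure-tone average are illustrative assumptions, not a clinical protocol. Only the 25 dB and 70 dB cut-offs come from the interview; the 40 dB boundary between mild and moderate loss is an assumption.

```python
from statistics import fmean
from typing import Callable, Dict, Optional


def find_threshold(hears: Callable[[int, float], bool], freq_hz: int,
                   start_db: float = 60.0, step_db: float = 5.0,
                   floor_db: float = -10.0) -> Optional[float]:
    """Step a pure tone down from start_db until it is no longer heard.

    Returns the lowest level (dB HL) that was still heard, or None if the
    tone was inaudible even at start_db. `hears` stands in for the listener.
    """
    if not hears(freq_hz, start_db):
        return None
    level = start_db
    while level - step_db >= floor_db and hears(freq_hz, level - step_db):
        level -= step_db
    return level


def pure_tone_average(thresholds_db: Dict[int, float]) -> float:
    """Mean threshold across whichever frequencies were tested (assumed set)."""
    return fmean(thresholds_db.values())


def classify(pta_db: float) -> str:
    if pta_db < 25:      # from the interview: below 25 dB is clinically normal
        return "normal"
    if pta_db <= 40:     # assumed boundary between mild and moderate loss
        return "mild"
    if pta_db <= 70:     # 70 dB cut-off from the interview
        return "moderate"
    return "severe to profound"


# Usage with a hypothetical listener whose true thresholds are known.
true_thresholds = {500: 12, 1000: 18, 2000: 22, 4000: 31}


def listener(freq_hz: int, level_db: float) -> bool:
    return level_db >= true_thresholds[freq_hz]


measured = {f: find_threshold(listener, f) for f in true_thresholds}
pta = pure_tone_average(measured)
print(measured, pta, classify(pta))
```

In this made-up example the 4000 Hz threshold alone would fall outside the normal range, yet the average stays below 25 dB, which illustrates how people classed as "normal-hearing" can still differ in acuity.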

Watkins: When people’s hearing is impaired, their brains sometimes compensate by shifting language processing functions that typically reside in the left hemisphere over to the right hemisphere. However, this can increase the cognitive demand on the right hemisphere, which has been associated with an increased risk of dementia. Because of this link between hearing loss and dementia, Doug and I were interested in learning about the extent to which people experience hearing loss.

Lee: Two percent of adults aged between 45 and 54 have some hearing loss, and the rate increases to about 8.5 percent for adults aged between 55 and 64. And people with higher hearing thresholds, meaning relatively poor hearing acuity, tend to activate the right side of the brain. But we were looking at youngsters, right? Aged between 18 and 41, and none of them are actually regarded as people with hearing loss. All 35 subjects are within the clinically normal hearing range. There’s no indication that they have hearing loss; they all fall below the 25-decibel threshold, the clinically normal hearing range. So, this is not the population you would typically choose to study the connection between hearing loss and other cognitive impairments, because their hearing is really sharp. They’re really good, in general.

Leigh: Next, Ryan and I wanted to know more about how the participants were presented with the auditory stimuli while they were inside of a functional magnetic resonance imaging – or fMRI machine – and also how this resulted in limiting the number of participants that could be included in the study.

Lee: This is sort of a two-alternative forced-choice task. The task is to identify who is performing the action. So, for example, if the sentence is “Boys that kiss girls are happy,” it’s the boys who kiss the girls, right? Boys are male, so the subject is asked to press the male button. If it’s a female, they press the female button. So, if you don’t pay attention to the sentence that you’re hearing, you get chance-level performance. We had four different runs, so four different blocks of fMRI scanning, and if subjects had chance-level performance in any of those four runs, we decided to discard them. Some subjects actually had below-chance performance, like 13 percent or 29 percent. The way that could happen is if they accidentally mapped the buttons to the opposite gender, right? So, 13 percent would actually be 87 percent. Maybe the subject did a good job and understood, but just messed up the button press. Still, we couldn’t endorse that as a good data set, and that opposite, below-chance performance didn’t happen in all four runs; we saw it only in some of the runs. So, we just weren’t quite sure, and to be safe, we decided to discard those four subjects. There was also one subject who moved their head more than ten millimeters in runs one and two. In fMRI, as you may know, it’s very important to keep the head as still as possible. If you move your head more than two millimeters, there’s some problem with the data. This subject moved more than ten millimeters, so there was no question that we couldn’t use that data set. And we had to discard two subjects because we didn’t have their working memory data. The reason is that those two subjects participated early in the study, when we used a different working memory test, called the automated working memory test. We realized that it took so long that it just couldn’t fit the schedule of our study, so unfortunately we couldn’t make use of that data, and we decided not to include those two subjects. Together with the four subjects whose performance was at chance level or even below chance, that’s why there were a total of seven subjects we could not make use of.
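The exclusion rules described above can be expressed as a screening function. This is a minimal sketch under stated assumptions: the 32 trials per run, the use of a one-sided binomial test to decide what counts as "chance level," and the 2 mm motion limit (borrowed from the rule of thumb Yune mentions) are illustrative choices, not the study's documented criteria, and the participants are invented. A below-chance run, such as 13 percent correct, also fails the test, matching the team's decision to discard such data even though 1 - 0.13 = 0.87 hints at swapped buttons.

```python
from dataclasses import dataclass
from math import comb
from typing import List, Optional


@dataclass
class Participant:
    pid: str
    correct_per_run: List[int]   # correct answers in each fMRI run
    trials_per_run: int          # hypothetical: same number of trials in every run
    max_motion_mm: float         # largest head displacement across runs
    working_memory: Optional[float] = None  # None when the score is missing


def p_above_chance(correct: int, trials: int) -> float:
    """One-sided binomial p-value: chance of >= `correct` hits by guessing (p = 0.5)."""
    hits = sum(comb(trials, k) for k in range(correct, trials + 1))
    return hits / 2 ** trials


def exclusion_reasons(p: Participant, alpha: float = 0.05,
                      motion_limit_mm: float = 2.0) -> List[str]:
    """Return the reasons (if any) to drop a participant; an empty list keeps them."""
    reasons = []
    # A run that is not reliably above 50% (including below-chance runs) fails.
    if any(p_above_chance(c, p.trials_per_run) >= alpha for c in p.correct_per_run):
        reasons.append("chance-level or below-chance accuracy in a run")
    if p.max_motion_mm > motion_limit_mm:
        reasons.append("excessive head motion")
    if p.working_memory is None:
        reasons.append("missing working-memory score")
    return reasons


# Hypothetical participants illustrating each rule.
cohort = [
    Participant("s01", [28, 27, 30, 29], 32, 0.8, 0.71),
    Participant("s02", [4, 28, 29, 30], 32, 0.5, 0.64),    # 4/32 = 12.5% in run 1
    Participant("s03", [28, 28, 29, 27], 32, 10.5, 0.58),  # moved more than 10 mm
    Participant("s04", [29, 28, 30, 28], 32, 0.6),         # no working-memory score
]
kept = [p.pid for p in cohort if not exclusion_reasons(p)]
print(kept)
```

Returning a list of reasons, rather than a single yes/no, keeps a record of why each participant was dropped, which is the kind of tally reported above (four for accuracy, one for motion, two for missing data).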

Watkins: A main goal of the study was to examine the extent to which hearing acuity relates to people’s neural engagement during the comprehension of spoken sentences. We asked Yune to explain what he and his team found regarding this, as well as what other analyses they conducted that were related to those findings.

Lee: Our initial question was how the brain is modulated by the degree of complexity in sentences. So, we manipulated the syntactic structure and recorded the brain activity of men and women while they listened to a bunch of sentences in the magnet, and we found what we expected to see: the brain obviously puts in more effort when the complexity of the sentence structure increases. There were two different manipulations: one was an object- or subject-relative embedded clause, and the other was adding what we call an adjective phrase. For the adjective phrase, we actually didn’t find anything in the brain. I have to admit that was kind of a failure of the study design, because we didn’t find any differential brain activity associated with the adjective phrase, but we did find differential brain activity associated with object- versus subject-relative embedded clauses.
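The example sentence from the task, "Boys that kiss girls are happy," contains a subject-relative clause. Swapping the order inside the embedded clause gives its object-relative counterpart, "Boys that girls kiss are happy," in which the girls are now the ones doing the kissing, so the correct button flips. The sketch below is a hypothetical illustration of how such sentence pairs and the answer key for the who-did-it task could be generated; the word lists and function names are invented, and the study's adjective-phrase manipulation is not modeled.

```python
from typing import Dict, Literal

Clause = Literal["subject", "object"]

# Hypothetical noun-to-button mapping for the male/female forced choice.
GENDER: Dict[str, str] = {"boys": "male", "girls": "female",
                          "men": "male", "women": "female"}


def build_sentence(head: str, other: str, verb: str, predicate: str,
                   clause: Clause) -> str:
    """Attach a relative clause to `head` and finish with `predicate`."""
    if clause == "subject":
        # Head noun performs the action: "Boys that kiss girls are happy"
        embedded = f"{head} that {verb} {other}"
    else:
        # Head noun receives the action: "Boys that girls kiss are happy"
        embedded = f"{head} that {other} {verb}"
    return f"{embedded} {predicate}".capitalize()


def agent(head: str, other: str, clause: Clause) -> str:
    """Who performs the action: the head noun only in subject-relative clauses."""
    return head if clause == "subject" else other


def correct_button(head: str, other: str, clause: Clause) -> str:
    return GENDER[agent(head, other, clause)]


for clause in ("subject", "object"):
    print(build_sentence("boys", "girls", "kiss", "are happy", clause),
          "->", correct_button("boys", "girls", clause))
```

Because the two versions use the same words, any difference in brain activity between them can be attributed to the clause structure rather than to vocabulary.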

Leigh: As it turned out, these findings weren’t the ones that most surprised Yune and his team, nor were they those that ended up garnering the attention of the popular media, as he explains after this short break.

Ad: We Share Science

Leigh: When we left off, we asked Yune to explain the unexpected results that he and his team found as well as their implications.

Lee: As an additional analysis, we looked at the effect of hearing differences in the youngsters. Even though they were all in the clinically normal hearing range, we thought, “Hey, let’s see what would happen if we relate their differences in hearing threshold, or hearing acuity, to their brain activity,” and to our surprise, we found that the right frontal area, which is kind of homologous to the left language network, showed up as a significant cluster. It was modulated by hearing loss, and it was not modulated by sentence structure, like object-relative versus subject-relative clauses. So, it is related to hearing loss, but it doesn’t respond to differences in syntactic structure within a sentence. Then we did some more confirmatory analyses, some of which were requested by reviewers, and we were convinced that the right frontal area comes into play even with a very slight hearing decline. And then, somehow, it tries to help out with language processing in youngsters.
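The additional analysis described above relates each participant's hearing threshold to brain activity. A minimal sketch of that idea, assuming one activation value per participant per voxel, is a voxel-wise Pearson correlation with the threshold. The published analysis may well have used a regression model with other covariates, so treat this as a simplified stand-in run on invented numbers.

```python
from math import sqrt
from statistics import fmean
from typing import List, Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    mx, my = fmean(x), fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def r_to_t(r: float, n: int) -> float:
    """t statistic for a correlation across n participants (df = n - 2)."""
    return r * sqrt((n - 2) / (1 - r * r))


def voxelwise_correlation(activation: List[List[float]],
                          thresholds_db: List[float]) -> List[float]:
    """activation[s][v] is participant s's response at voxel v.

    Returns one r per voxel, relating activation to hearing threshold.
    """
    n_voxels = len(activation[0])
    return [pearson_r([row[v] for row in activation], thresholds_db)
            for v in range(n_voxels)]


# Invented data: 6 participants, all below the 25 dB "normal" cut-off, 2 voxels.
thresholds = [3.0, 7.5, 10.0, 12.5, 17.5, 21.0]
activation = [[0.10, 0.52], [0.22, 0.47], [0.25, 0.55],
              [0.31, 0.49], [0.42, 0.51], [0.50, 0.50]]
r = voxelwise_correlation(activation, thresholds)
print([round(v, 2) for v in r], round(r_to_t(r[0], len(thresholds)), 2))
```

In this toy data the first voxel behaves like the right frontal cluster described above, rising with hearing threshold even though everyone is within the clinically normal range, while the second voxel shows no such relationship.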

Watkins: Like most researchers, Yune and his team reported their findings in text and tables as well as through images, which are typically called figures in academic publishing. Included among these figures were brain scans made while people listened to spoken sentences. Doug and I asked Yune how these interactive images are read; as you listen, you can manipulate them at parsing.science/tmap. That’s TMap.

Lee: A T map is basically what you get after you do a t-test on a voxel-by-voxel basis. Here, what I mean by voxel is the basic unit of neuroimaging data, just like a pixel in a picture. So, for every voxel you have a T statistic, a T value. Now let’s look at sentence versus noise, sentence greater than noise. You see this bilateral activation in the temporal lobe. The biggest slice you’re seeing is what’s called the horizontal image, and you see the side-by-side red activation, and also the frontal activation. So, for this sentence-versus-noise contrast, and I think it’s unthresholded data, these frontotemporal areas are lighting up in response to sentences more than to noise. The sentences engaged the language network and the auditory network more than the noise did, just a simple noise sound. So, that’s what it shows, and if you hover around and click here and there, you see a number and XYZ at the bottom. XYZ is the coordinate in this neuroimaging data, and the number right after that indicates the T value at that particular location, that coordinate. If the T value is negative, that means the area somehow lit up more for noise than for sentences.
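The voxel-by-voxel T value can be sketched in a few lines. This minimal Python example assumes one response estimate per participant per voxel for each condition and runs a one-sample t-test on the sentence-minus-noise differences. Real fMRI packages first fit a general linear model to each participant's time series; the numbers here are invented.

```python
from math import sqrt
from statistics import fmean, stdev
from typing import List


def t_map(sentence: List[List[float]], noise: List[List[float]]) -> List[float]:
    """One-sample t-test, voxel by voxel, on the sentence-minus-noise difference.

    sentence[s][v] and noise[s][v] are participant s's responses at voxel v.
    A positive t means sentence > noise; a negative t means noise > sentence.
    """
    n = len(sentence)
    t_values = []
    for v in range(len(sentence[0])):
        diffs = [sentence[s][v] - noise[s][v] for s in range(n)]
        sd = stdev(diffs)
        # t is undefined when every participant shows the identical difference.
        t_values.append(fmean(diffs) / (sd / sqrt(n)) if sd > 0 else float("nan"))
    return t_values


# Invented responses for 3 participants at 3 voxels.
sentence = [[3.0, 0.0, 1.0], [4.0, 0.0, 1.2], [5.0, 0.0, 0.9]]
noise = [[2.0, 1.0, 1.1], [2.0, 2.0, 1.0], [2.0, 3.0, 1.0]]
print([round(t, 2) for t in t_map(sentence, noise)])
```

The first voxel plays the role of a region that responds more to sentences (positive t), the second one responds more to noise (negative t), and the third shows no consistent difference (t near zero).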

Leigh: fMRI machines are notoriously loud. So, Ryan and I asked Yune how neuroscientists are able to test people’s hearing acuity while participants are inside of a scanner.

Lee: fMRI is in general sort of a hostile environment, because it generates really loud noise. The sound level is almost 110 decibels, which is comparable to a jackhammer. So, if you don’t put in earplugs, you can actually hurt your ears. As auditory neuroscientists, we present auditory stimuli while we are scanning people, and those stimuli can be masked by the loud scanning noise. So, the way we do it is by constantly turning the scanner on and off: when it’s in the off state, we present a sound, then we quickly turn the scanner back on and it records the brain activity. What ISSS, interleaved silent steady state imaging, does is create a pocket of silence where we present the sound, and then we record the brain activity a few seconds later. It’s an advanced version of the conventional technique, called the silent protocol. For the last year or so, I’ve been developing an even more advanced ISSS sequence, so that we have lengthened this pocket from five seconds to ten seconds and so forth. So, I think the technology will continue to evolve so that we can study the brain activity associated with sound processing.
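The on/off scheduling described above can be pictured as a timeline that alternates silent windows, when a sound is played, with acquisition windows. The sketch below is a simplified, hypothetical timing model only: it does not model the MR pulse sequence itself, and the repetition time, number of volumes, and stimulus length are assumptions. The 5-second and 10-second silent windows echo the numbers mentioned above.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Window:
    onset_s: float
    duration_s: float
    kind: str  # "silent + stimulus" or "acquisition"


def isss_timeline(n_trials: int, silent_s: float = 5.0, stimulus_s: float = 4.0,
                  volumes: int = 3, tr_s: float = 2.0) -> List[Window]:
    """Alternate quiet windows (sound presented) with image-acquisition windows.

    All parameters are illustrative assumptions, not a published protocol.
    """
    if stimulus_s > silent_s:
        raise ValueError("stimulus would overlap the scanner noise")
    timeline, t = [], 0.0
    for _ in range(n_trials):
        timeline.append(Window(t, silent_s, "silent + stimulus"))
        t += silent_s
        timeline.append(Window(t, volumes * tr_s, "acquisition"))
        t += volumes * tr_s
    return timeline


short = isss_timeline(2)                                  # 5 s silent pocket
longer = isss_timeline(2, silent_s=10.0, stimulus_s=8.0)  # 10 s silent pocket
for w in short:
    print(f"{w.onset_s:5.1f}s  {w.kind:<18} {w.duration_s:.1f}s")
```

Lengthening the silent pocket lets longer stimuli, such as full spoken sentences, play without overlapping scanner noise, at the cost of fewer acquisitions per minute.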

Watkins: Doug and I wondered if people’s comprehension of books – or any other written text – operates in the same way as when information is presented in an auditory fashion, as was done in Yune’s study.

Lee: Spoken language unfolds over time and then vanishes; it disappears. So, you really have to maintain the information in what’s called your working memory system, and then you have to relate what you heard a few seconds ago to what you’re hearing right now, whereas reading doesn’t have to go through that process. You can just revisit the text very quickly; it’s still there, it doesn’t disappear. But if readers have limited time and they don’t go back and forth or revisit what they just read, I think working memory in general should affect their comprehension, because if you don’t have a good working memory system, the information you just had is gone, and then you struggle to relate it, or to understand the entire story. So yeah, that’s why spoken language comprehension could be more challenging, especially for older people who go through significant working memory decline.

Leigh: If a hearing aid or cochlear implant can help restore some people’s lost hearing, might such devices also potentially improve the cognitive processing among those whose hearing has been damaged? Here’s what Yune had to say in response to our question.

Lee: The hope is that once we help them out by providing hearing aids or CIs, cochlear implants, their brains start to develop plasticity, so that the brain comes up with a way to compensate for some hearing loss by tapping into other cognitive resources. One study we’re designing right now is to train CI users on a working memory regimen and see if they benefit from it down the road. That way, even if they permanently lose their hearing acuity and have very minimal hearing, if they can develop some brain plasticity, they can compensate for their compromised hearing and maintain their conversations. So, that is our hope and our research goal at the moment.

Watkins: fMRI scanners work by detecting changes in blood oxygenation: hemoglobin, which contains iron, has different magnetic properties depending on whether it is carrying oxygen, and where oxygenated blood flows in the brain can indicate what’s being activated in it. So, Doug and I asked how these kinds of brain scanners can be used for purposes other than brain imaging.

Lee: fMRI is a way of indirectly examining neural activity by chasing the blood. It’s been almost 30 years, and a lot of validation studies have looked at the coupling between what’s called the BOLD, blood-oxygen-level-dependent, signal and neural activity, what they call the local field potential, and it’s been confirmed. So, we tend to believe that if fMRI shows a differential activity pattern, then the brain is operating differently. So, even though it’s kind of a crude measure, people came up with the idea of decoding humans’ thoughts or perceptions by looking at fMRI data. The way it works is basically feeding the fMRI data into a machine learning classifier so that it can decode whether people are seeing this picture or that picture, hearing this sound versus that sound, doing addition or subtraction, if you’re interested in math, or going to purchase car A versus car B. That has already been done in the cognitive neuroscience field for the last decade, and the consensus is that fMRI data is actually quite informative.
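The decoding idea can be illustrated with a deliberately simple classifier. This sketch uses a nearest-centroid rule with leave-one-out cross-validation on invented voxel patterns; it is a stand-in for whatever classifier a given study uses (support vector machines and logistic regression are common choices), and the labels "picture A" and "picture B" are hypothetical. Accuracy reliably above 50 percent on held-out trials is what indicates that the activity pattern carries information about the condition.

```python
from math import dist
from statistics import fmean
from typing import List, Sequence


def centroid(patterns: Sequence[Sequence[float]]) -> List[float]:
    """Voxel-by-voxel mean of several activity patterns."""
    return [fmean(values) for values in zip(*patterns)]


def predict(pattern: Sequence[float], train_patterns: List[List[float]],
            train_labels: List[str]) -> str:
    """Assign the label whose mean training pattern is closest (Euclidean)."""
    classes = sorted(set(train_labels))
    centroids = {
        c: centroid([p for p, l in zip(train_patterns, train_labels) if l == c])
        for c in classes
    }
    return min(classes, key=lambda c: dist(pattern, centroids[c]))


def leave_one_out_accuracy(patterns: List[List[float]], labels: List[str]) -> float:
    """Hold out each trial in turn, train on the rest, and score the prediction."""
    hits = 0
    for i, (pattern, label) in enumerate(zip(patterns, labels)):
        rest_patterns = patterns[:i] + patterns[i + 1:]
        rest_labels = labels[:i] + labels[i + 1:]
        hits += predict(pattern, rest_patterns, rest_labels) == label
    return hits / len(patterns)


# Invented 3-voxel patterns from trials of two conditions.
patterns = [[1.0, 0.1, 0.9], [0.9, 0.0, 1.1], [1.1, 0.2, 1.0],
            [0.1, 1.0, 0.0], [0.0, 0.9, 0.2], [0.2, 1.1, 0.1]]
labels = ["picture A"] * 3 + ["picture B"] * 3
print(leave_one_out_accuracy(patterns, labels))
```

Holding out each trial before training matters: scoring the classifier on the same trials it was trained on would overstate how much information the patterns really contain.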

Leigh: Ryan and I are also interested in another device common to neuroscience research: electroencephalography or EEG machines. We asked Yune what the similarities and differences are between the instruments, as well as whether there are recent developments in brain imaging that we should be aware of.

Lee: They’re looking at different aspects of neural processes. fMRI looks at spatial maps, or spatial processing, in the brain, whereas EEG looks at the temporal processing associated with particular cognitive tasks. The two can complement each other, and there are actually combined fMRI and EEG studies out there. Now, as I mentioned at the beginning, I recently started using fNIRS, which stands for functional near-infrared spectroscopy, and which you can think of as a mini version of fMRI. The principle is the same as fMRI’s, but it sends light into the brain. The light hits the hemoglobin, and it gives us an idea of which areas in the brain consume more oxygen than others. It’s been around for quite some time, but recently it went through a real technical breakthrough. So, we’re about to use this new device for studies with seniors, patients, and some children, because it’s very compatible with those populations. With fMRI, you can’t study people with CIs or people with pacemakers: they have metal in their bodies, and you can’t put them in the magnet because the metal gets pulled toward the scanner. It’s very dangerous. So, one big advantage of fNIRS is that it allows us to study people’s brain activity while they perform tasks in a naturalistic position. One interesting use of this is for my own music studies, when people are doing this sort of dual drumming or, you know, dual singing, and it can also be used for communication, the back and forth of a conversation. So yeah, I’m looking forward to using this.
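The idea that light "hits the hemoglobin" is usually formalized with the modified Beer-Lambert law, which is not discussed in the episode and is added here only as a hedged illustration. In its common form, the change in optical density at wavelength λ is ΔOD(λ) = [ε_HbO(λ)·Δ[HbO] + ε_HbR(λ)·Δ[HbR]] · d · DPF(λ), where the ε values are extinction coefficients of oxygenated and deoxygenated hemoglobin, d is the source-detector distance, and DPF is the differential pathlength factor. Measuring at two wavelengths gives two equations in two unknowns. The sketch below solves that 2 × 2 system; the coefficients and path values in the usage example are placeholders, not real tabulated values.

```python
from typing import Tuple

Pair = Tuple[float, float]


def hemoglobin_changes(d_od: Pair, eps: Tuple[Pair, Pair],
                       distance_cm: float, dpf: Pair) -> Pair:
    """Invert the modified Beer-Lambert law at two wavelengths.

    d_od: change in optical density at wavelengths 1 and 2
    eps:  ((eps_HbO_1, eps_HbR_1), (eps_HbO_2, eps_HbR_2))
    dpf:  differential pathlength factor at wavelengths 1 and 2
    Returns (change in HbO, change in HbR) in the units implied by eps.
    """
    # Divide out the effective optical path length d * DPF at each wavelength.
    b1 = d_od[0] / (distance_cm * dpf[0])
    b2 = d_od[1] / (distance_cm * dpf[1])
    (e11, e12), (e21, e22) = eps
    det = e11 * e22 - e12 * e21
    if det == 0:
        raise ValueError("extinction coefficient matrix is singular")
    # Cramer's rule for e11*x + e12*y = b1 and e21*x + e22*y = b2.
    d_hbo = (b1 * e22 - e12 * b2) / det
    d_hbr = (e11 * b2 - e21 * b1) / det
    return d_hbo, d_hbr


# Placeholder coefficients, chosen only to make the arithmetic easy to follow.
eps = ((1.0, 3.0), (2.5, 1.5))
print(hemoglobin_changes((-3.6, 10.5), eps, distance_cm=3.0, dpf=(6.0, 5.0)))
```

The two wavelengths are needed because oxygenated and deoxygenated hemoglobin absorb light differently; with a single wavelength the two concentration changes could not be separated.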

Watkins: That was Yune Lee discussing his article “Differences in hearing acuity among ‘normal-hearing’ young adults modulate the neural basis for speech comprehension,” which he published with five other researchers in the May-June 2018 issue of eNeuro. You’ll find a link to their paper at parsingscience.org/e30, along with bonus content and other material that he discussed during the episode.

Leigh: Though we just launched it two weeks ago, Parsing Science’s weekly newsletter has been a big hit. You can sign up at parsingscience.org/newsletter, or if you’d like to check out our inaugural issue first, go to parsing.science/n1. We’ve also got a limited number of Parsing Science stickers available. So, if you’d like one, just let us know where to send it, and we’ll mail one for free to each of the next 17 newsletter subscribers.

Watkins: Next time on Parsing Science, we will be joined by Adrian Dyer from RMIT University and Monash University, both located in Victoria, Australia. He’ll talk with us about his experimental research showing that wild honey bees may be able to understand the concept of zero.

Adrian Dyer: So far, we know the ability has been demonstrated in humans, some other primates, a parrot, and now the honey bee. So, it’s a pretty big breakthrough.

Watkins: We hope that you will join us again.
